Haoqi Fan
Researcher in Computer Vision

About Me

Haoqi Fan is an AI researcher working on multimodal foundation models. He spent seven years at Facebook AI Research (FAIR) and graduated from the Robotics Institute at Carnegie Mellon University. His research interests lie in computer vision and deep learning.

Recent News


Selected Publications

Emerging Properties in Unified Multimodal Pretraining
Chaorui Deng∗, Deyao Zhu∗, Kunchang Li∗, Chenhui Gou∗, Feng Li∗, Zeyu Wang, Shu Zhong, Weihao Yu, Xiaonan Nie, Ziang Song, Guang Shi§, Haoqi Fan∗†
Paper, website, code, huggingface

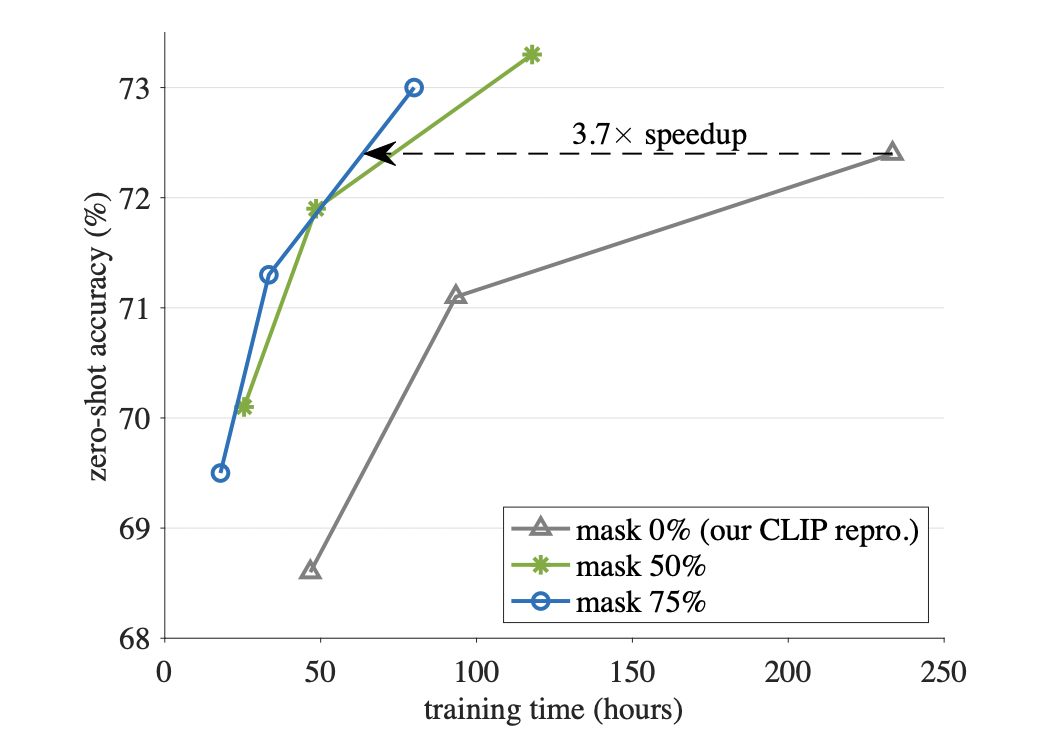

Scaling Language-Image Pre-training via Masking
Yanghao Li*, Haoqi Fan*, Ronghang Hu*, Christoph Feichtenhofer†, Kaiming He†
arXiv, 2022
Paper

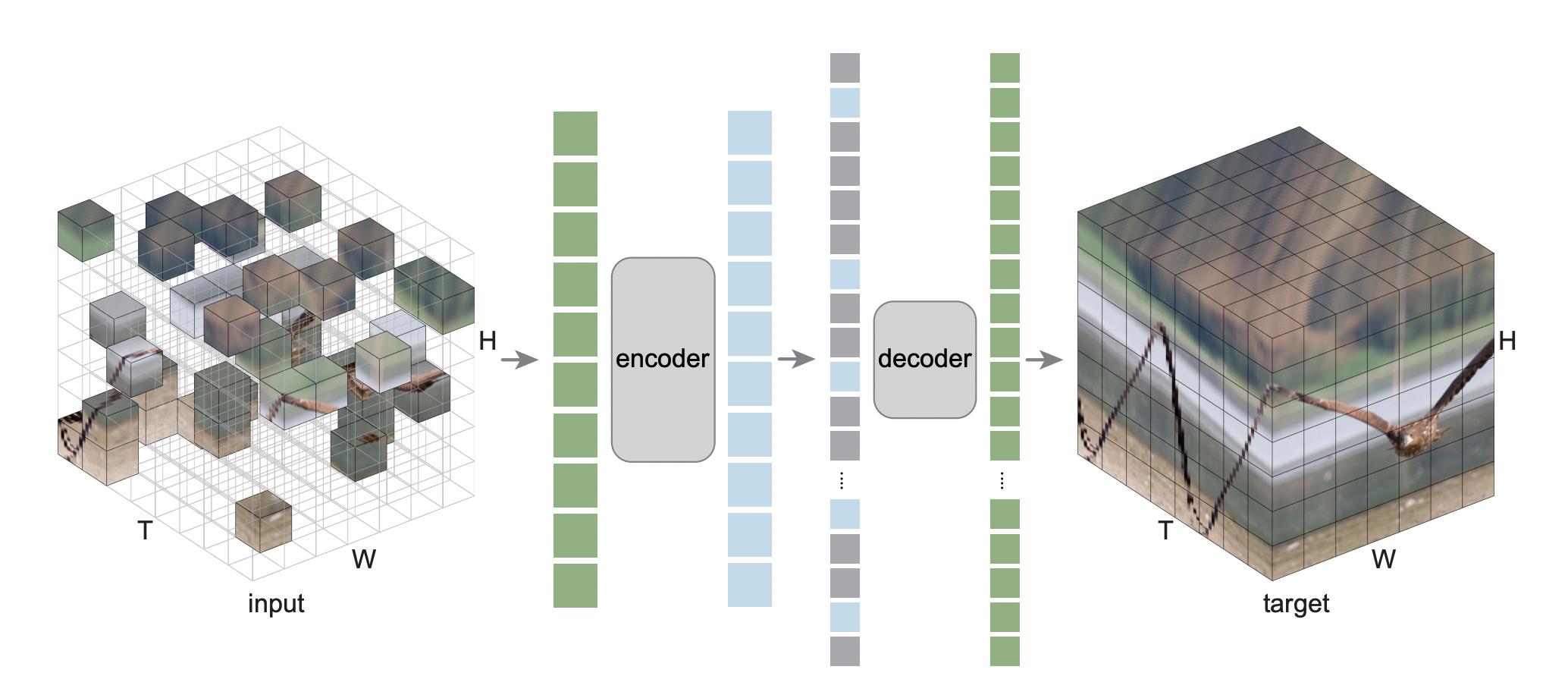

Masked Autoencoders As Spatiotemporal Learners
Christoph Feichtenhofer*, Haoqi Fan*, Yanghao Li, Kaiming He
NeurIPS, 2022
Paper, code

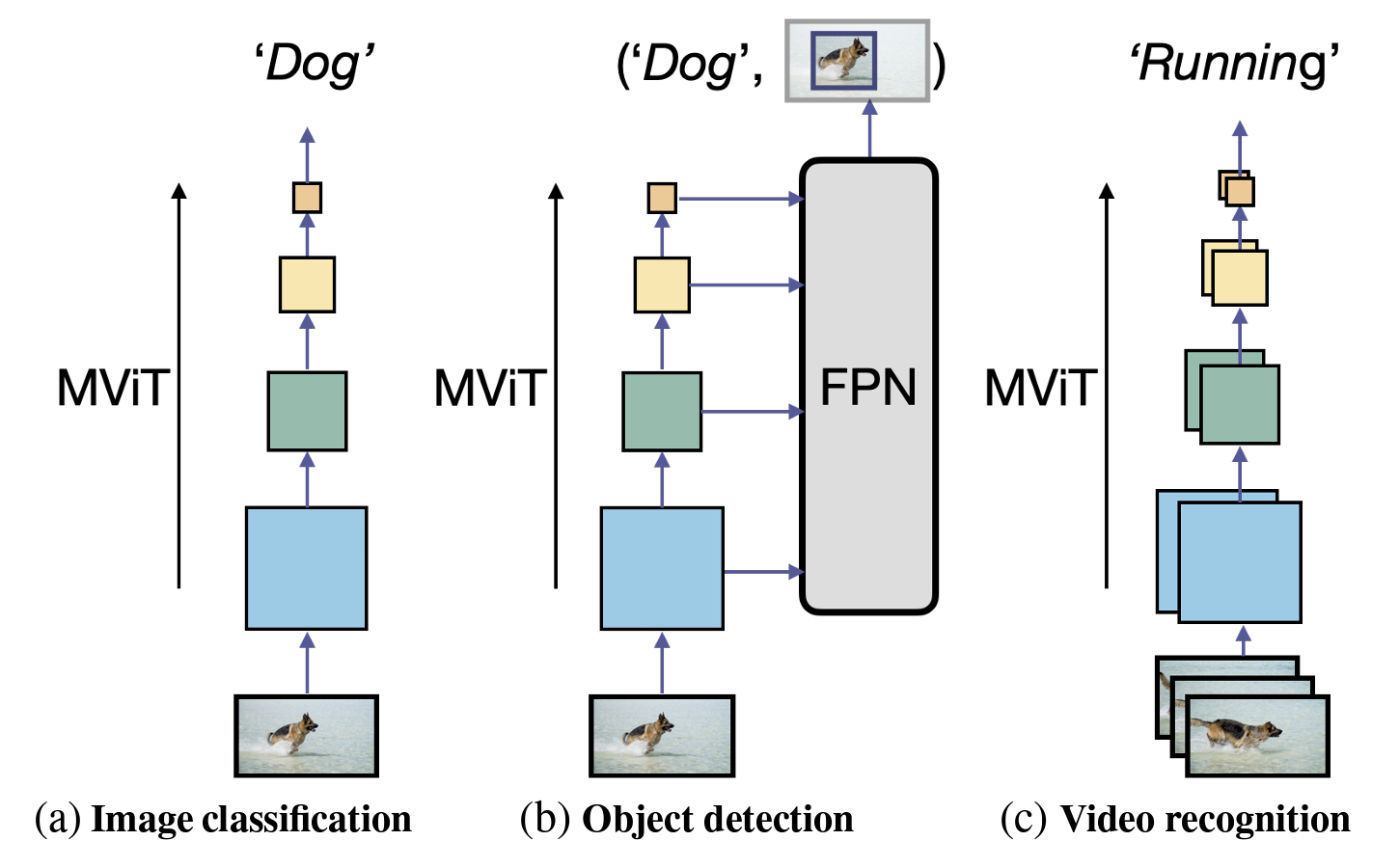

Multiscale Vision Transformers
Haoqi Fan*, Bo Xiong*, Karttikeya Mangalam*, Yanghao Li*, Zhicheng Yan, Jitendra Malik, Christoph Feichtenhofer
ICCV, 2021
Paper, code

Improved Multiscale Vision Transformers for Classification and Detection
Yanghao Li, Chao-Yuan Wu, Haoqi Fan, Karttikeya Mangalam, Bo Xiong, Jitendra Malik, Christoph Feichtenhofer
Tech Report, 2021
Paper, code

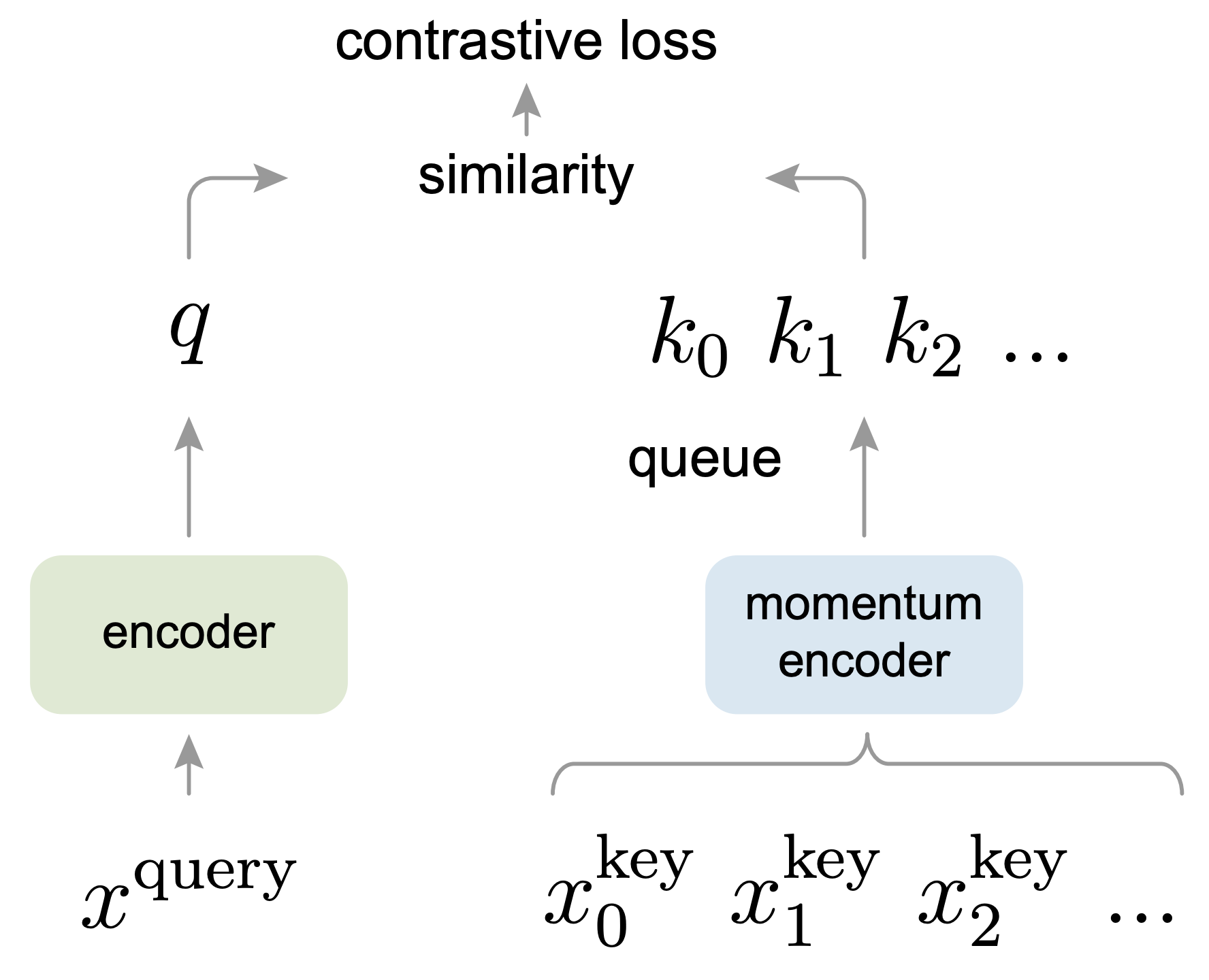

Momentum Contrast for Unsupervised Visual Representation Learning
Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, Ross Girshick
Conference on Computer Vision and Pattern Recognition (CVPR), 2020 (Oral), Best Paper Nomination
Paper, code

Improved Baselines with Momentum Contrastive Learning
Xinlei Chen, Haoqi Fan, Ross Girshick, Kaiming He
2-Page Tech Report, 2020
Paper, code

On the Importance of Asymmetry for Siamese Representation Learning
Xiao Wang*, Haoqi Fan*, Yuandong Tian, Daisuke Kihara, Xinlei Chen
CVPR, 2022
Paper, code

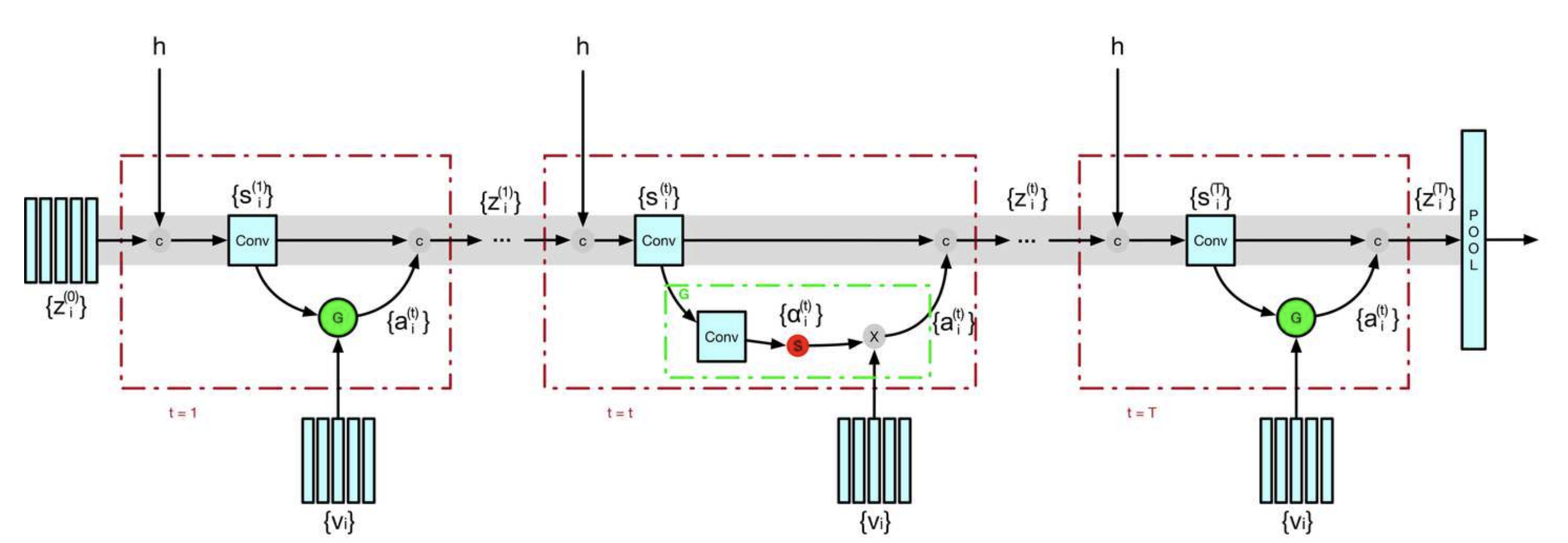

SlowFast Networks for Video Recognition
Christoph Feichtenhofer, Haoqi Fan, Jitendra Malik, Kaiming He
International Conference on Computer Vision (ICCV), 2019 (Oral)
Paper, code

Stacked Latent Attention for Multimodal Reasoning
Haoqi Fan, Jiatong Zhou
Conference on Computer Vision and Pattern Recognition (CVPR), 2018
Paper

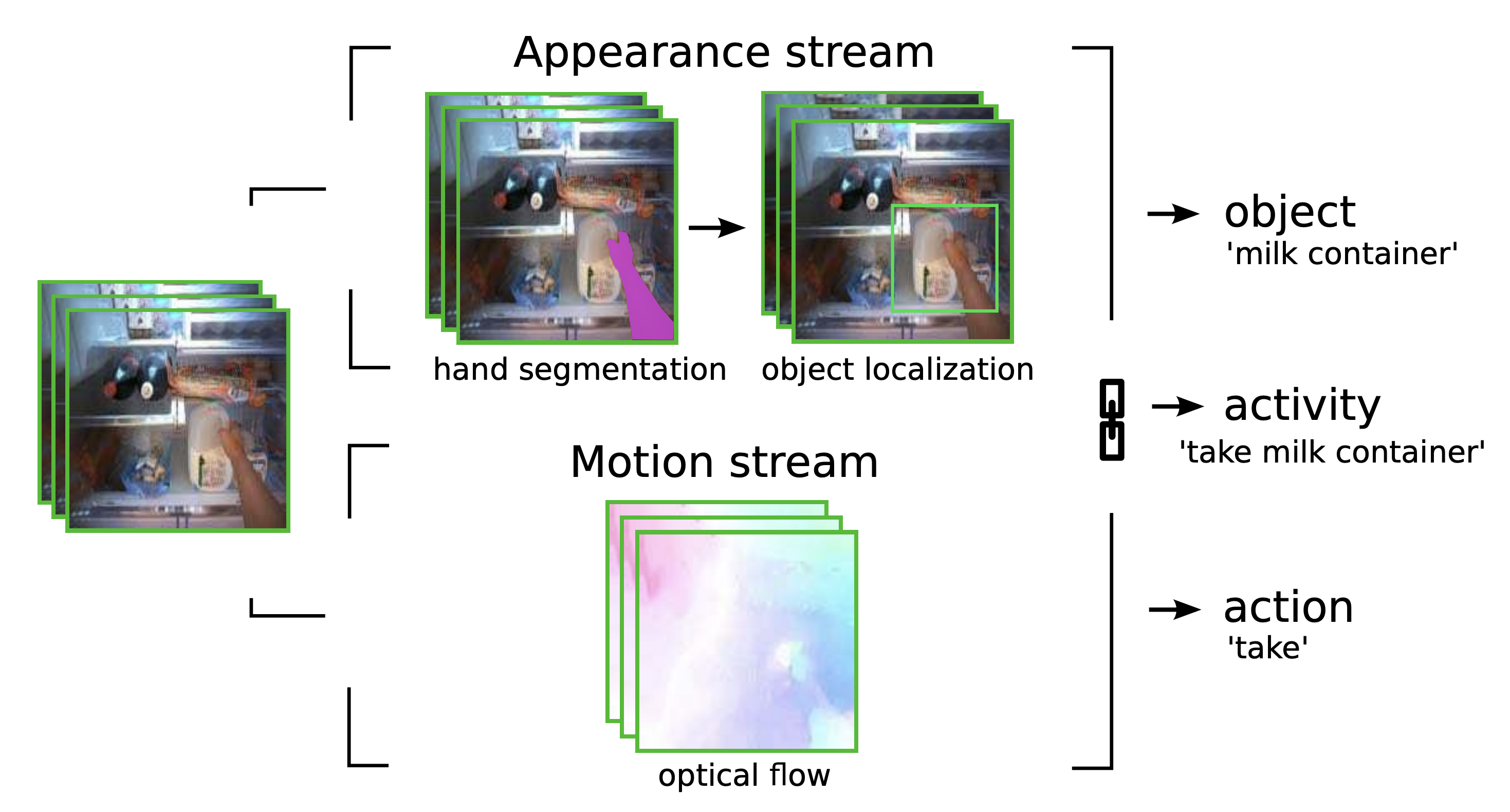

Going Deeper into First-Person Activity Recognition
Minghuang Ma, Haoqi Fan, Kris M. Kitani
Conference on Computer Vision and Pattern Recognition (CVPR), 2016
Paper

Open Source Projects

BAGEL: The Open-Source Unified Multimodal Model
Chaorui Deng∗, Deyao Zhu∗, Kunchang Li∗, Chenhui Gou∗, Feng Li∗, Zeyu Wang, Shu Zhong, Weihao Yu, Xiaonan Nie, Ziang Song, Guang Shi§, Haoqi Fan∗†

PySlowFast: Video Understanding Codebase for State-of-the-Art Research
Haoqi Fan, Yanghao Li, Wan-Yen Lo, Christoph Feichtenhofer

PyTorchVideo: A Deep Learning Library for Video Understanding
Haoqi Fan*, Tullie Murrell*, Heng Wang‡, Kalyan Vasudev Alwala‡, Yanghao Li‡, Yilei Li‡, Bo Xiong‡, Nikhila Ravi, Meng Li, Haichuan Yang, Jitendra Malik, Ross Girshick, Matt Feiszli, Aaron Adcock†, Wan-Yen Lo†, Christoph Feichtenhofer†
Paper
